Want do to something useful? Something that (slightly) improves your security in the internet? Want some internet cookies? Then please deploy CAA.

What is CAA?

CAA means Certificate Authority Authorization. In its essence, it’s just a DNS record. You know, just like those A records that resolve names into IP addresses, or MX records that list your mailserver(s). This DNS record tells other people which Certificate Authorities (CA) are allowed to issue certificates for your domain – nothing more, nothing less.

Why is that important? Who cares about this?

I do 🙂 . No seriously, it’s a useful thing, even though it may not seem like this on first glance. A CAA record on your domain improves the security of your domain. That is because by default every CA in the entire world can issue a certificate for your domain. That isn’t ideal, because there are more than 100 companies operating one or more root certificate authorities and much more subordinate Certificate Authorities, probably well over a thousand. Every one of these can in theory go rogue and forge a certificate for your domain(s). Are all of these thousand CA’s equally thrustworthy and secure? Well, from a browser security standpoint all of these are considered “trusted”, so everything signed by them will get a green lock in your browser (or any other application using TLS). Imagine just one of them getting hacked: Even if you are not a customer of them, they can still attack your website. You can detect such attacks using The Power Of Certificate Transparency, but preventing is better than detecting, right?1

With CAA, you can decide which CA you trust. If you only use a single CA, you can tell the entire world “I only trust them, no one else may issue a certificate for me”. That’s what CAA does. The DNS record prevents anyone else from issuing a certificate for your domain. Deploying CAA can be an important difference when a CA gets hacked or goes rogue for some reason.

How does this work on a technical side? How does a DNS record prevent someone from issuing? Can’t a rogue CA just ignore the DNS record?

Yes, they can in theory. However that would be really, really bad for them. CAA is mandatory for every single publicly trusted CA since 2017. No CA is allowed to simply “ignore” a CAA record2. It must respect the record – failing to do so might cause the CA to loose it’s publicy trusted status. CAA is like a law: Failing to follow the law will likely lead to punishment. That is why it’s important to deploy CAA: If no one deploys it, there’s no benefit in having it. It’s also possible to automatically monitor whether a certificate issuance was legal according to CAA (by using the power of Certificate Transparency and checking against the CAA record) and immediatly notify authorities if a mis-issuance happens.

Okay nice, now how do I go about deploying it?

First of all, we should talk about how CAA checking works from a CA point of view. The CA climbs the DNS domain names up to the TLD and checks each name for a valid CAA record. Let me explain it by example:

If we wanted to issue a certificate for blog.germancoding.com, the CA would first check for a CAA record at blog.germancoding.com. If no CAA record is found, the CA queries germancoding.com for a CAA record. Finally, if that was unsuccesfull too, the CA queries the TLD .com (yes, that’s technically also a domain3) for a record. If there isn’t anything either, the CA can consider that there isn’t any CAA record, so issuance is allowed by everyone (allow-by-default in this case). There are edge cases like when there’s a CName record involved, but this upwards climbing is the basic principle. CAA checking is stopped upon the first match, so a CAA record on blog.germancoding.com overrides one on germancoding.com.

What I’m trying to say here is that for most people a single CAA record on the main domain is enough – subdomains are covered automatically. Records on subdomains are only needed if you want to override something, for example:

“I only want Let’s Encrypt to issue for me, but on a single subdomain only I will additionally allow Sectigo” – this is easily doable due to the climbing-up principle explained above.

The actual record may look like this:

germancoding.com. IN CAA 0 issue "letsencrypt.org"I think this format is called the Bind Zone Format, due to Bind’s popularity it’s seen everywhere (and due to the fact that it’s a standard). This means that there is a DNS record on germancoding.com which is of type CAA – CAA Records have their own type, which is kinda special these days – most protocols scramble data into DNS using a TXT record. The actual “data” of the Record is

0 issue “letsencrypt.org”

The first 0 is a flag. This flag is currently “reserved for future use” and should simply be set to zero (the actual definition of the flag is a bit more complicated, there’s already the “critical bit”, but let’s not blow up this post any more than neccessary).

The second part, “issue” describes what action we want to do (the definition calls this a “tag”): Currently defined tags are issue, issuewild, iodef, contactemail, contactphone. Most of these (iodef, contact*) are used for (real-time) reporting of CAA violations or issues. That isn’t really neccessary to implement, the issue/issuewild tags are the important ones.

The difference between issue and issuewild is that issue controls general issuance of certificates and issuewild controls the issuance of wildcard certificates. If no issuewild is present, issue takes over and also controls the issue of wildcards, so you do not need issuewild, unless you want special treatment for wildcard certificates.

The last part is the name of the certificate authority. The format here isn’t set in stone, each CA can name themselves, so you must check your CA documentation which name they use for their CAA checking. Most CA’s I’ve seen use their primary domain name, which is what is shown in my example (my example would be valid for Let’s Encrypt).

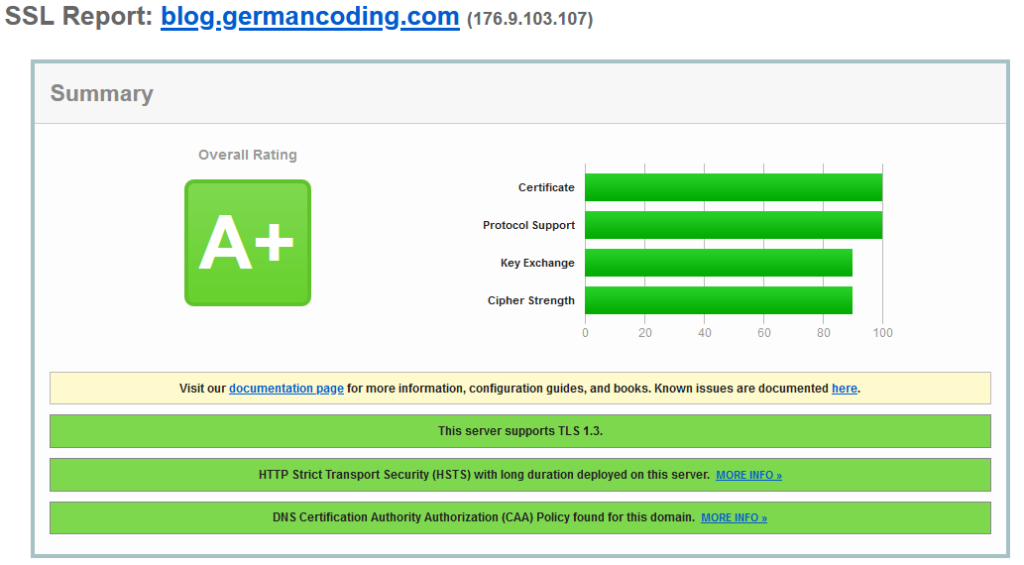

Once you have deployed this, you can use a TLS checker (like the one from Qualys) to see how it looks in the real world (you can also do a “dig CAA example.net” from a suitable box that has the dnsutils command). If you want to double check that everything works as intended, issue a certificate from your designated CA’s and see if you’re getting errors – if so, your CAA is too strict or incorrectly set and doesn’t allow issuance.

Excursion for specialists: The RFC 8657 CAA extension

The DNS CAA standard was recently (November 2019) expanded. The definition of what is new is documented in RFC 8657.

This extension is especially interesting for CA’s that use the ACME protocol (like Let’s Encrypt). With this extension, you have more fine-grained access control how certificates can be issued for your domain. For example if you’re familiar with the ACME protocol, you know that there are multiple challenges, e.g the DNS-01 and the HTTP-01 challenge (+ the forgotten ALPN challenge). With the extension from the RFC, you can define which challenges are allowed on your domains. You could, for example, only allow the DNS challenge. This would prevent someone who has the ability to re-reoute traffic to get certificates from Let’s Encrypt using the HTTP-01 challenge even when there’s a CAA record only allowing Let’s Encrypt. This obviously means that you must use the challenges allowed by your DNS CAA record yourself. An example of the extension in action can be seen on my main domain, germancoding.com:

# dig CAA germancoding.com

;; ANSWER SECTION:

germancoding.com. 3600 IN CAA 0 issue "letsencrypt.org; validationmethods=dns-01"As you can see, we can place a semicolon after the name of your allowed CA. After the semicolon we can set parameters, like the allowed challenges. The CA will disallow any challenge not listed in the CAA record, if the parameter is present.

There’s more than just the validationmethods. You can also bind to a specific account URI (e.g the account number or name you have at your CA), so that only accounts under your control can issue certificates – no one can create a new account at the same CA and use that for issuance, if you set that parameter.

Please note that this standard is too new to be supported everywhere. First of all, most CA’s do not use the ACME protocol and therefore things like validationmethods usually have no meaning to them. Secondly, even our ACME exemplary CA Let’s Encrypt doesn’t support the new extension yet in production. Nevertheless, you can still deploy it today on your domains, as support will be there in the future, I’m pretty sure – Let’s Encrypt already has it in staging and it’s only a matter of time until it’s deployed in production too.

Update: After many years, Let’s Encrypt enabled this feature in production in December 2022.